Append a new batch of data

We have one file in storage and are about to receive a new batch of data.

In this notebook, we’ll register the new file and append it to the existing dataset as a new version.

import lamindb as ln

import lnschema_bionty as lb

import readfcs

lb.settings.species = "human"

💡 loaded instance: testuser1/test-facs (lamindb 0.54.2)

ln.track()

💡 notebook imports: anndata==0.9.2 lamindb==0.54.2 lnschema_bionty==0.31.2 pytometry==0.1.4 readfcs==1.1.6 scanpy==1.9.5

💡 Transform(id='SmQmhrhigFPLz8', name='Append a new batch of data', short_name='facs1', version='0', type=notebook, updated_at=2023-09-27 19:03:47, created_by_id='DzTjkKse')

💡 Run(id='iKuM6oyOKGVBDvx0YQ7P', run_at=2023-09-27 19:03:47, transform_id='SmQmhrhigFPLz8', created_by_id='DzTjkKse')

Ingest a new file

Access

Load another .fcs file, which we’ll then validate and register:

filepath = ln.dev.datasets.file_fcs()

adata = readfcs.read(filepath)

adata

AnnData object with n_obs × n_vars = 65016 × 16

var: 'n', 'channel', 'marker', '$PnB', '$PnR', '$PnG'

uns: 'meta'
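The `var` columns `$PnB`, `$PnR` and `$PnG` are per-parameter keywords from the FCS file's TEXT segment. In the FCS standard, `$PnB` is the number of bits used per value of parameter n, `$PnR` is its range and `$PnG` is its amplifier gain. The sketch below shows, under simplifying assumptions, how such keywords can be grouped into one row per parameter. It is not readfcs's implementation: it ignores escaped (doubled) delimiters, mapping `$PnN` to `channel` and `$PnS` to `marker` is an assumption, and the sample TEXT string is made up.

```python
from __future__ import annotations


def parse_text_segment(text: str) -> dict[str, str]:
    """Split an FCS TEXT segment into a keyword -> value dict.

    The first character is the delimiter; keywords and values alternate after it.
    Simplification: escaped (doubled) delimiters inside values are not handled.
    """
    delim = text[0]
    tokens = text[1:].split(delim)
    if tokens and tokens[-1] == "":  # a trailing delimiter leaves an empty token
        tokens.pop()
    # FCS keywords are case-insensitive, so normalize them to upper case
    return {tokens[i].upper(): tokens[i + 1] for i in range(0, len(tokens) - 1, 2)}


def parameter_table(keywords: dict[str, str]) -> list[dict[str, str]]:
    """Collect the keywords of parameters 1..$PAR into one row per parameter."""
    rows = []
    for n in range(1, int(keywords["$PAR"]) + 1):
        rows.append({
            "n": str(n),
            "channel": keywords.get(f"$P{n}N", ""),  # assumed source of `channel`
            "marker": keywords.get(f"$P{n}S", ""),   # assumed source of `marker`
            "$PnB": keywords.get(f"$P{n}B", ""),     # bits per value
            "$PnR": keywords.get(f"$P{n}R", ""),     # range
            "$PnG": keywords.get(f"$P{n}G", ""),     # amplifier gain (optional keyword)
        })
    return rows


# hypothetical TEXT segment describing two parameters
text = "/$PAR/2/$P1N/FSC-A/$P1B/32/$P1R/262144/$P2N/BV421-A/$P2S/CD3/$P2B/32/$P2R/262144/$P2G/1.0/"
rows = parameter_table(parse_text_segment(text))
```

Optional keywords such as `$PnS` and `$PnG` may be missing for some parameters, so the sketch falls back to an empty string instead of raising.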

Transform: normalize

import anndata as ad

import pytometry as pm

pm.pp.split_signal(adata, var_key="channel")

pm.tl.normalize_arcsinh(adata, cofactor=150)

adata = adata[adata.to_df().isna().sum(axis=1) == 0]  # subset to rows that have no NaN values

adata.to_df().describe()

| | KI67 | CD3 | CD28 | CD45RO | CD8 | CD4 | CD57 | CD14 | CCR5 | CD19 | CD27 | CCR7 | CD127 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| count | 64593.000000 | 64593.000000 | 64593.000000 | 64593.000000 | 64593.000000 | 64593.000000 | 64593.000000 | 64593.000000 | 64593.000000 | 64593.000000 | 64593.000000 | 64593.000000 | 64593.000000 |
| mean | -7.784467 | -7.958064 | -7.880424 | -7.849991 | -7.682381 | -7.695841 | -7.772347 | -7.827088 | -7.427381 | -7.693235 | -8.009255 | -7.514956 | -7.471545 |
| std | 30.911205 | 30.796326 | 30.847746 | 30.776819 | 30.846949 | 30.873545 | 30.907915 | 30.640249 | 30.767073 | 30.675623 | 30.902098 | 30.668348 | 30.830299 |
| min | -62.628761 | -62.628761 | -62.628761 | -62.628761 | -62.628761 | -62.628761 | -62.628761 | -62.628761 | -62.628761 | -62.628761 | -62.628761 | -62.628761 | -62.628761 |
| 25% | -0.009892 | -0.009892 | -0.009892 | -0.009892 | -0.009892 | -0.009892 | -0.009892 | -0.009892 | -0.009892 | -0.009892 | -0.009892 | -0.009892 | -0.009892 |
| 50% | -0.000321 | -0.000322 | -0.000322 | -0.000322 | -0.000321 | -0.000322 | -0.000321 | -0.000322 | -0.000321 | -0.000322 | -0.000322 | -0.000321 | -0.000321 |
| 75% | 1.086298 | 1.045244 | 0.819897 | 1.050630 | 1.104099 | 0.987080 | 0.995414 | 1.041992 | 1.145463 | 0.932001 | 1.096484 | 1.150226 | 1.248759 |
| max | 84.386696 | 84.386627 | 84.385368 | 84.398567 | 84.405098 | 84.398537 | 84.402496 | 84.398567 | 84.337654 | 84.382713 | 84.402489 | 84.362930 | 84.374611 |
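The normalization and NaN filtering above can be sketched in plain Python on a toy matrix. This assumes `normalize_arcsinh` applies the standard cytometry transform y = arcsinh(x / cofactor), which is roughly linear near zero and logarithmic for large |x|. The helper names and data are hypothetical, and pytometry works on `adata.X` directly rather than on nested lists.

```python
import math


def arcsinh_normalize(rows, cofactor=150.0):
    """Apply y = asinh(x / cofactor) to every value; NaN stays NaN."""
    return [[math.asinh(x / cofactor) for x in row] for row in rows]


def drop_nan_rows(rows):
    """Keep only rows without NaN, the analogue of the mask
    `adata.to_df().isna().sum(axis=1) == 0`."""
    return [row for row in rows if not any(math.isnan(x) for x in row)]


# hypothetical raw intensities: 3 cells x 2 markers, one cell with a missing value
raw = [[0.0, 150.0], [float("nan"), 10.0], [-300.0, 1500.0]]
clean = drop_nan_rows(arcsinh_normalize(raw, cofactor=150.0))
```

A value equal to the cofactor maps to asinh(1), so the cofactor sets the scale at which the transform switches from near-linear to near-logarithmic behavior.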

Validate cell markers

Let’s see how many markers validate:

validated = lb.CellMarker.validate(adata.var.index)

❗ 7 terms (53.80%) are not validated for name: KI67, CD45RO, CD4, CD14, CCR5, CD19, CCR7
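Conceptually, validation is an exact lookup of each value in the registry field (here `CellMarker.name`), which yields a boolean mask. Below is a toy in-memory sketch; lamindb queries its database instead, and the registry content is hypothetical, chosen so that the result mirrors the log above.

```python
def validate(values, registered_names):
    """Return a boolean mask: True where a value exactly matches a registered name."""
    return [value in registered_names for value in values]


markers = ["KI67", "CD3", "CD28", "CD45RO", "CD8", "CD4", "CD57",
           "CD14", "CCR5", "CD19", "CD27", "CCR7", "CD127"]
# hypothetical registry content
registered_names = {"CD3", "CD28", "CD8", "CD57", "CD27", "CD127"}

mask = validate(markers, registered_names)
not_validated = [m for m, ok in zip(markers, mask) if not ok]
print(f"{len(not_validated)} terms are not validated: {', '.join(not_validated)}")
```

Because the lookup is exact, a marker spelled differently from its registered name (for example, different capitalization) fails validation even if the registry knows it under another spelling.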

Let’s standardize and re-validate:

adata.var.index = lb.CellMarker.standardize(adata.var.index)

validated = lb.CellMarker.validate(adata.var.index)

❗ found 1 synonym in Bionty: ['KI67']

please add corresponding CellMarker records via `.from_values(['Ki67'])`

❗ 3 terms (23.10%) are not validated for name: Ki67, CD45RO, CCR5
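Standardization maps synonyms to canonical names and leaves unknown values untouched, which is why `KI67` becomes `Ki67` but still fails validation until a `Ki67` record exists. A toy sketch, assuming a case-insensitive synonym lookup: the synonym table and registry are hypothetical, while the canonical spellings `Ki67`, `Cd4`, `Cd19` and `Ccr7` are the ones that appear in the `file.features` output further down.

```python
def standardize(values, synonyms, registered_names):
    """Replace synonyms by canonical names; registered or unknown values pass through."""
    lookup = {synonym.lower(): name for synonym, name in synonyms.items()}
    return [v if v in registered_names else lookup.get(v.lower(), v) for v in values]


# hypothetical registry and synonym table
registered_names = {"CD3", "Cd4", "Cd19", "Ccr7"}
synonyms = {"KI67": "Ki67", "CD4": "Cd4", "CD19": "Cd19", "CCR7": "Ccr7"}

values = ["KI67", "CD3", "CD4", "CD19", "CCR7", "CCR5"]
standardized = standardize(values, synonyms, registered_names)
still_not_validated = [v for v in standardized if v not in registered_names]
```

Here `Ki67` is a known synonym target without a registered record, and `CCR5` is unknown to both tables, so both remain non-validated after standardization.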

Next, register non-validated markers from Bionty:

records = lb.CellMarker.from_values(adata.var.index[~validated])

ln.save(records)

Now they pass validation:

validated = lb.CellMarker.validate(adata.var.index)

assert all(validated)
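The register-then-revalidate loop above can be modeled the same way: records are created only for terms found in a public reference (Bionty, in the notebook), and saving them adds their names to the registry. In this sketch, `make_records`, `save_records` and the reference set are hypothetical in-memory stand-ins, not the lamindb or Bionty API.

```python
def make_records(values, public_reference):
    """Hypothetical stand-in for from_values(): one record per term known to the reference."""
    return [{"name": v} for v in values if v in public_reference]


def save_records(records, registered_names):
    """Hypothetical stand-in for ln.save(): register the record names."""
    for record in records:
        registered_names.add(record["name"])


# hypothetical state after standardization
markers = ["Ki67", "CD3", "CD45RO", "Cd4", "CCR5"]
registered_names = {"CD3", "Cd4"}
public_reference = {"Ki67", "CD45RO", "CCR5", "CD3", "Cd4"}

missing = [m for m in markers if m not in registered_names]
save_records(make_records(missing, public_reference), registered_names)
validated = [m in registered_names for m in markers]
```

A term absent from the public reference would produce no record in this model and would keep failing validation.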

Register

modalities = ln.Modality.lookup()

features = ln.Feature.lookup()

efs = lb.ExperimentalFactor.lookup()

species = lb.Species.lookup()

markers = lb.CellMarker.lookup()

file = ln.File.from_anndata(
    adata,
    description="Flow cytometry file 2",
    field=lb.CellMarker.name,
    modality=modalities.protein,
)

/opt/hostedtoolcache/Python/3.9.18/x64/lib/python3.9/site-packages/anndata/_core/anndata.py:1230: ImplicitModificationWarning: Trying to modify attribute `.var` of view, initializing view as actual.

df[key] = c

... storing '$PnR' as categorical

/opt/hostedtoolcache/Python/3.9.18/x64/lib/python3.9/site-packages/anndata/_core/anndata.py:1230: ImplicitModificationWarning: Trying to modify attribute `.var` of view, initializing view as actual.

df[key] = c

... storing '$PnG' as categorical

❗ 3 terms (100.00%) are not validated for name: FSC-A, FSC-H, SSC-A

❗ no validated features, skip creating feature set

file.save()

file.labels.add(efs.fluorescence_activated_cell_sorting, features.assay)

file.labels.add(species.human, features.species)

file.features

Features:

var: FeatureSet(id='5kXx8o5DYLzcErTvRUOe', n=13, type='number', registry='bionty.CellMarker', hash='cInZdHy3fspNNLGysq01', updated_at=2023-09-27 19:03:52, modality_id='Pa89rywu', created_by_id='DzTjkKse')

'CD27', 'CD8', 'Ccr7', 'CD57', 'Cd4', 'CD3', 'Ki67', 'Cd19', 'CD28', 'CCR5', ...

external: FeatureSet(id='e6G9UL4bgQ79lx0A719H', n=2, registry='core.Feature', hash='4cUW5o4hYSyrIE3NTHiK', updated_at=2023-09-27 19:03:52, modality_id='2CEhNp28', created_by_id='DzTjkKse')

🔗 assay (1, bionty.ExperimentalFactor): 'fluorescence-activated cell sorting'

🔗 species (1, bionty.Species): 'human'

View data flow:

file.view_flow()

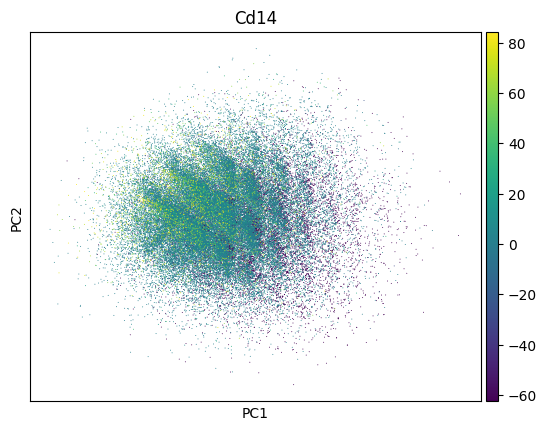

Inspect a PCA for QC; this dataset looks a lot like noise:

import scanpy as sc

sc.pp.pca(adata)

sc.pl.pca(adata, color=markers.cd14.name)

Create a new version of the dataset by appending a file

Query the previous version, then create version 2 from the new file plus the file of version 1:

dataset_v1 = ln.Dataset.filter(name="My versioned FACS dataset").one()

dataset_v2 = ln.Dataset(
    [file, dataset_v1.file], is_new_version_of=dataset_v1, version="2"
)

dataset_v2

Dataset(id='8RZdIbll16NTrAwo7l1h', name='My versioned FACS dataset', version='2', hash='H2N4gXSjQN7Qy3LOcETW', transform_id='SmQmhrhigFPLz8', run_id='iKuM6oyOKGVBDvx0YQ7P', initial_version_id='8RZdIbll16NTrAwo7lRL', created_by_id='DzTjkKse')
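The repr above shows that the new version keeps the dataset name and records an `initial_version_id` linking it to the first version of the family. Below is a toy model of that bookkeeping. It assumes every later version points to the first version rather than to its immediate predecessor; the class, its method and the ids are hypothetical, not lamindb's implementation.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class DatasetVersion:
    id: str
    name: str
    version: str
    files: Tuple[str, ...]
    initial_version_id: Optional[str] = None  # None marks the first version

    def new_version(self, new_id: str, files, version: str) -> "DatasetVersion":
        """Create the next version: same name, linked to the first version."""
        first_id = self.initial_version_id or self.id
        return DatasetVersion(id=new_id, name=self.name, version=version,
                              files=tuple(files), initial_version_id=first_id)


v1 = DatasetVersion(id="uid-1", name="My versioned FACS dataset", version="1", files=("file-1",))
# like `[file, dataset_v1.file]`: the new file plus the file of version 1
v2 = v1.new_version("uid-2", ["file-2", *v1.files], version="2")
v3 = v2.new_version("uid-3", v2.files, version="3")
```

Pointing all versions at the first one makes "list every version of this dataset" a single filter on `initial_version_id`, at the cost of not recording which version each one was derived from.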

dataset_v2.features

AttributeError: 'str' object has no attribute 'registry'


dataset_v2.save()

dataset_v2.labels.add(efs.fluorescence_activated_cell_sorting, features.assay)

dataset_v2.labels.add(species.human, features.species)

dataset_v2.view_flow()